Take a trip to the TV/entertainment section of your local big box store and you may notice that change is coming. Just as most people have accepted HDTV as normal TV, we are faced with the new buzz of 4K and 8K TVs and monitors. There are even rumblings of 16K TV. Take a trip over to the computer section and you will see various forms of QHD. That brings us to a discussion of the latest video display resolution standards: what are 4K, 8K, 16K, and QHD?

Comparing 4K, 8K, and 16K with QHD

These are the latest video display resolution standards. The "K" stands for roughly 1,000 pixels of horizontal resolution (a consumer 4K UHD display is 3840 pixels wide, and the DCI cinema 4K format is 4096 pixels wide), but the names also relate loosely to 1080p HDTV: 4K UHD is equal to four 1080p images, and 8K is equal to four 4K images. It is also interesting to note that 4K has its real roots in the digital transfer of 35mm film. 4K is the scanner resolution that can capture the grain pattern of 35mm movie film, which allows digital projection to almost satisfy a classic movie enthusiast.

In QHD, "HD" refers to 720p HDTV, and QHD (2560×1440) is equal to four 720p (1280×720) images. Although 1080p HDTV may seem to have become the de facto video standard, many consumers do not realize how much TV programming is still broadcast in 720p and 1080i. Just like the original 720p TVs, which were first to market, QHD monitors can currently be found in both desktop and laptop computers and will likely start appearing in tablets in the near future. 4K will likely develop mostly in the high-end home theater market until real market interest is generated, volume increases, and prices fall.
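The "four of the previous format" relationships above can be checked with a little pixel arithmetic. The Python sketch below assumes the common consumer dimensions (1280×720, 1920×1080, 2560×1440, 3840×2160 for 4K UHD, 7680×4320 for 8K UHD). The 16K entry is a hypothetical 15360×8640, simply 8K doubled in each direction, since no consumer 16K format is settled. The names `RESOLUTIONS`, `pixel_count`, and `ratio` are illustrative only.

```python
# Nominal (width, height) in pixels for each format.
# The 16K entry is an ASSUMPTION: 8K doubled in each direction.
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "QHD": (2560, 1440),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
    "16K (assumed)": (15360, 8640),
}


def pixel_count(name: str) -> int:
    """Total number of pixels in one frame of the named format."""
    width, height = RESOLUTIONS[name]
    return width * height


def ratio(bigger: str, smaller: str) -> float:
    """How many 'smaller' frames fit, by pixel count, into one 'bigger' frame."""
    return pixel_count(bigger) / pixel_count(smaller)


if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        print(f"{name:>14}: {w}x{h} = {w * h:,} pixels")
    print("QHD / 720p =", ratio("QHD", "720p"))
    print("4K / 1080p =", ratio("4K UHD", "1080p"))
    print("8K / 4K    =", ratio("8K UHD", "4K UHD"))
```

Each of the three ratios comes out to exactly 4.0, because each format doubles both the width and the height of the one before it.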

Video Standard Acceptance

I, for one, eventually warmed to the concept of a universally accepted video standard. Dealing with separate formats for computer monitors, digital signage monitors, AV presentation monitors, and CCTV monitors created a challenge in the public bid project environment. Too many options can lead misinformed contractors to make poor choices, which result in poor system performance. If there were one common video standard for everything, an AV specifier's life would be simple. Unfortunately, when one seeks simplicity, compromises are inevitable.

Living with Compromise

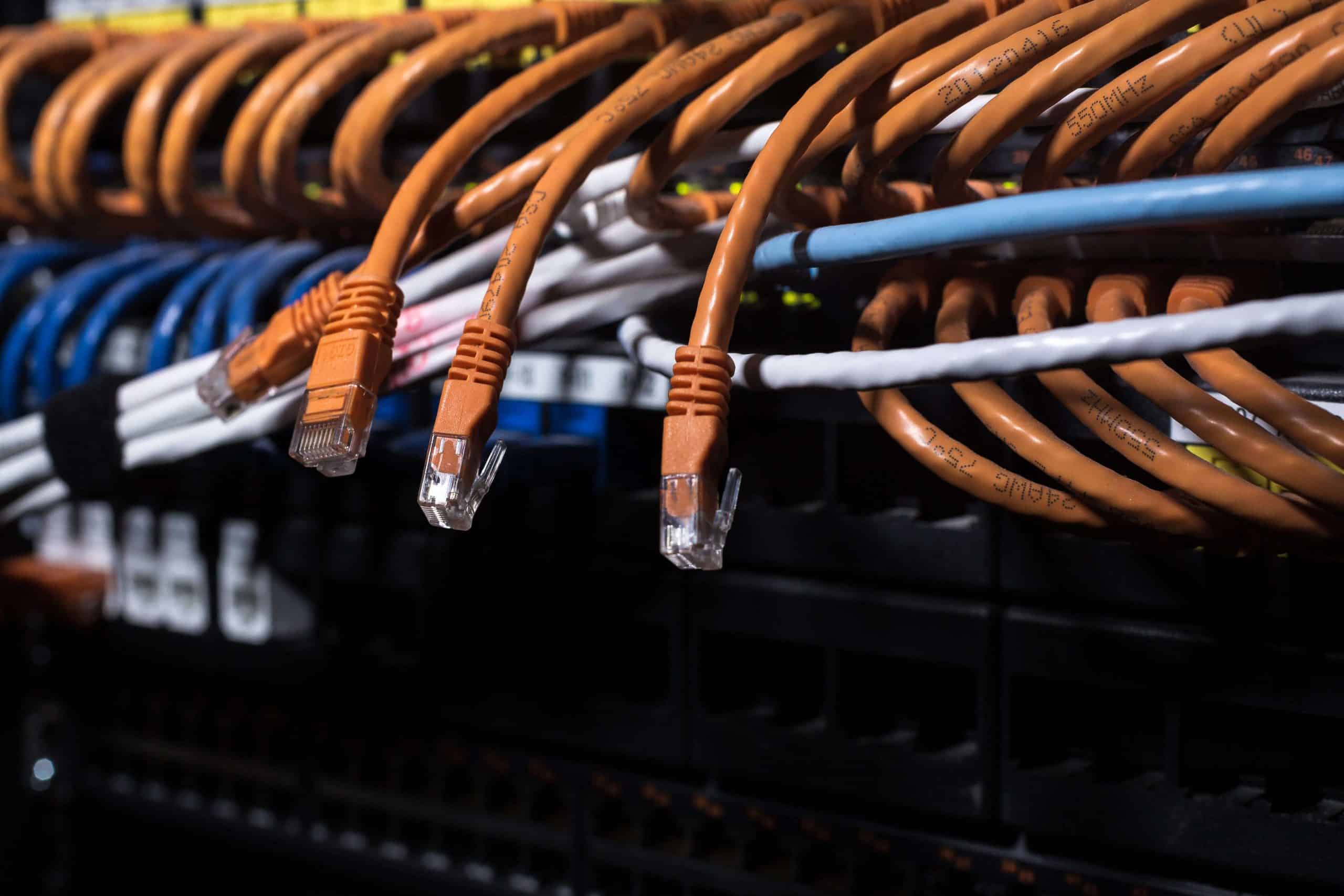

The easiest way to visualize the compromise reached in developing a universal video standard is IP CCTV video on HD monitors. The roots of IP CCTV are a blend of early video conferencing (4CIF, 704×480), computer video (VGA, 640×480), and SD broadcast TV (NTSC, 525 lines). All of these video standards are relatively low resolution compared with computer and HD video monitors, yet they still require a lot of network bandwidth compared with web and email traffic. By pixel area, approximately six full-size 4CIF images fit on a 1080p monitor (typically arranged 3 wide by 2 high, with slight scaling), which is good for viewing multiple images or multiplexed (mux) screens. When a single camera image is blown up to full screen, however, the image becomes highly pixelated.

Now consider deploying 4K monitors in a CCTV viewing center that uses 3-megapixel video cameras. A 3-megapixel CCTV image is roughly equal to a 1080p image, so an operator could view four 1080p video streams on one 4K monitor. Viewing a single camera full screen, however, will be highly pixelated, since the display has four times as many pixels as the original image. This phenomenon is the main reason why some people continue to claim that analog video provides better quality than IP CCTV. The end user's issue is not poor captured image quality, but the mismatch between the display resolution and the source resolution.
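The display-versus-source arithmetic in this section can be sketched in a few lines of Python. This is a rough illustration, not a design tool: it assumes NTSC 4CIF frames of 704×480 and, following the text, treats a 3-megapixel camera as a 1080p-class source. The helper names (`tiles_by_area`, `native_grid`, `grid_scale`, `upscale_factor`) are hypothetical.

```python
from typing import Tuple

Resolution = Tuple[int, int]  # (width, height) in pixels

HD_1080P: Resolution = (1920, 1080)
UHD_4K: Resolution = (3840, 2160)
CIF_4_NTSC: Resolution = (704, 480)  # ASSUMED NTSC 4CIF frame size


def tiles_by_area(display: Resolution, source: Resolution) -> float:
    """How many source frames fit on the display, counting total pixel area only."""
    (dw, dh), (sw, sh) = display, source
    return (dw * dh) / (sw * sh)


def native_grid(display: Resolution, source: Resolution) -> Tuple[int, int]:
    """Columns and rows of unscaled source frames that fit without cropping."""
    (dw, dh), (sw, sh) = display, source
    return dw // sw, dh // sh


def grid_scale(display: Resolution, source: Resolution, cols: int, rows: int) -> float:
    """Scale factor each tile needs to fill a cols x rows grid, keeping aspect ratio."""
    (dw, dh), (sw, sh) = display, source
    return min(dw / (cols * sw), dh / (rows * sh))


def upscale_factor(display: Resolution, source: Resolution) -> float:
    """Linear enlargement when one source frame is fitted to the full screen."""
    (dw, dh), (sw, sh) = display, source
    return min(dw / sw, dh / sh)


if __name__ == "__main__":
    print(f"4CIF tiles on 1080p by area: {tiles_by_area(HD_1080P, CIF_4_NTSC):.2f}")
    print("Unscaled 4CIF grid on 1080p:", native_grid(HD_1080P, CIF_4_NTSC))
    print(f"3x2 grid tile scale: {grid_scale(HD_1080P, CIF_4_NTSC, 3, 2):.2f}")
    print(f"Single 4CIF full screen on 1080p: {upscale_factor(HD_1080P, CIF_4_NTSC):.2f}x")
    # Following the text, treat a 3 MP camera as roughly a 1080p source.
    print(f"1080p-class stream on 4K: {upscale_factor(UHD_4K, HD_1080P):.1f}x linear, "
          f"{tiles_by_area(UHD_4K, HD_1080P):.0f}x area")
```

Under these assumptions, about 6.1 4CIF frames fit on a 1080p screen by area, but only a 2×2 grid fits unscaled, so a 3×2 layout draws each tile at roughly 91% size. A single 4CIF image shown full screen is enlarged 2.25×, and a 1080p-class stream on a 4K monitor is enlarged 2× in each direction, or four times the pixel area.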

As consultants in audio/visual displays and acoustics, we routinely integrate incompatible displays into quality presentation environments.

Ask the Right Question

The important question is not whether these higher resolutions offer better quality than the current HD offerings, but whether we are using the technology correctly. For the user, the more relevant questions are, "What does the user expect as the final presented media?" and "How much detail does the user actually need?"

Matching monitor resolution to video source resolution will only become more critical as display resolutions continue to increase. In the past, display resolution was more closely matched to common video source resolutions. As display resolution increases and a supersized "one-size-fits-all" approach becomes the norm in video displays, finding displays that match the native source resolution can be an ordeal that only a trained A/V designer is equipped to handle.

System and hardware designers must keep in mind that users tend to perceive a larger, unpixelated image as better quality than a smaller, high-resolution image. Sound backwards? Read on, and the reasoning may surprise you.

The Importance of PPI

A critical A/V factor is effective PPI, or pixels per inch. The human eye is limited in its ability to resolve individual pixels at great distances, which allows relatively low-resolution images to look good blown up to large sizes on billboards and the sides of buildings. As an example in TV, how many viewers can tell the difference between 720p and 1080p? The answer is "not many" if they are watching from a proper viewing distance of three times the screen height. At this distance, the difference in effective PPI between 720p and 1080p displays of the same diagonal size should be barely noticeable.
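To put numbers on effective PPI and the three-screen-heights rule, the following Python sketch computes PPI from a display's diagonal and pixel dimensions, along with the 3× height viewing distance. The 50-inch 16:9 screen is a hypothetical example, and the function names are illustrative, not from any standard.

```python
import math


def screen_dimensions(diagonal_in: float, aspect_w: int = 16, aspect_h: int = 9):
    """Width and height (inches) of a screen with the given diagonal and aspect ratio."""
    unit = diagonal_in / math.hypot(aspect_w, aspect_h)
    return aspect_w * unit, aspect_h * unit


def ppi(diagonal_in: float, px_w: int, px_h: int) -> float:
    """Pixels per inch, measured along the diagonal."""
    return math.hypot(px_w, px_h) / diagonal_in


def three_h_distance(diagonal_in: float) -> float:
    """Viewing distance (inches) of three times the screen height."""
    _, height = screen_dimensions(diagonal_in)
    return 3 * height


if __name__ == "__main__":
    diagonal = 50  # hypothetical 50-inch 16:9 TV
    print(f"720p:  {ppi(diagonal, 1280, 720):.1f} PPI")
    print(f"1080p: {ppi(diagonal, 1920, 1080):.1f} PPI")
    print(f"3H viewing distance: {three_h_distance(diagonal):.1f} in")
```

For the 50-inch example this gives about 29.4 PPI for 720p and 44.1 PPI for 1080p, with a 3H distance of about 73.5 inches (just over 6 feet).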

We should also keep in mind that 4K and higher standards are aimed at moving the viewer closer to larger screens. Accordingly, 1.5 times the screen height may become the new recommended viewing distance. Meanwhile, from 1995 to 2010, movie theaters actually shrank most of their screens, by as much as 50% compared with the good old "Kodachrome" days.

The interesting factor with high-resolution displays is that each new technology effectively fits more pixels into smaller spaces. This higher pixel density goes back to capturing the grain of 35mm film. While higher pixel counts do allow for physically larger monitors, effective PPI dictates that the viewing sweet spot moves closer to the monitor. Advances in display technology therefore allow people to work closer to larger monitors without perceiving pixelation. This, however, requires a source with resolution as high as the display's. If the source resolution is low, as with current IP CCTV cameras, users will need to view the image from a greater distance to achieve a satisfactory picture. This agrees with the "farther distance" concept presented earlier.
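One common way to quantify the sweet spot is to assume the eye resolves about 1 arcminute (roughly 20/20 vision) and compute the distance at which a single pixel row shrinks below that angle. Expressed in screen heights, that distance depends only on the number of pixel rows, not on the screen size. The Python sketch below is a simplification under that acuity assumption (it ignores content, contrast, and scaling filters), and its function names are hypothetical.

```python
import math

# ASSUMED visual acuity limit: about 1 arcminute (roughly 20/20 vision).
ARCMINUTE = math.radians(1 / 60)


def blend_distance_heights(rows: int, acuity_rad: float = ARCMINUTE) -> float:
    """Distance, in screen heights, beyond which one pixel row subtends less than
    the acuity angle, so individual pixels stop being resolvable."""
    return 1.0 / (rows * 2 * math.tan(acuity_rad / 2))


def effective_rows(source_rows: int, display_rows: int) -> int:
    """Scaling cannot add detail, so the coarser of source and display
    sets the finest visible detail."""
    return min(source_rows, display_rows)


if __name__ == "__main__":
    cases = [
        ("720p on 720p", 720, 720),
        ("1080p on 1080p", 1080, 1080),
        ("4K source on 4K", 2160, 2160),
        ("1080p-class camera on 4K", 1080, 2160),
        ("4CIF (480 rows) on 4K", 480, 2160),
    ]
    for label, src, disp in cases:
        rows = effective_rows(src, disp)
        print(f"{label:>26}: ~{blend_distance_heights(rows):.1f} screen heights")
```

Under this assumption, 1080p blends at about 3.2 screen heights and 4K at about 1.6, close to the 3× and 1.5× figures above. A 1080p-class camera on a 4K monitor still needs about 3.2 screen heights, and a 480-row 4CIF image stretched across a 4K screen needs roughly 7.2.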

Non-Pixelated Impression

I anticipate that lower-resolution display alternatives, similar to displays currently used on tablet computers, will be needed for viewing low-resolution images. Our audio/visual and acoustical designers always consider the effective PPI of source images and match it with the effective PPI of each display to maximize the effectiveness of the monitors provided. We're always ready to address your audio, video, noise, vibration control, distribution, and media management issues with professional and experienced staff.

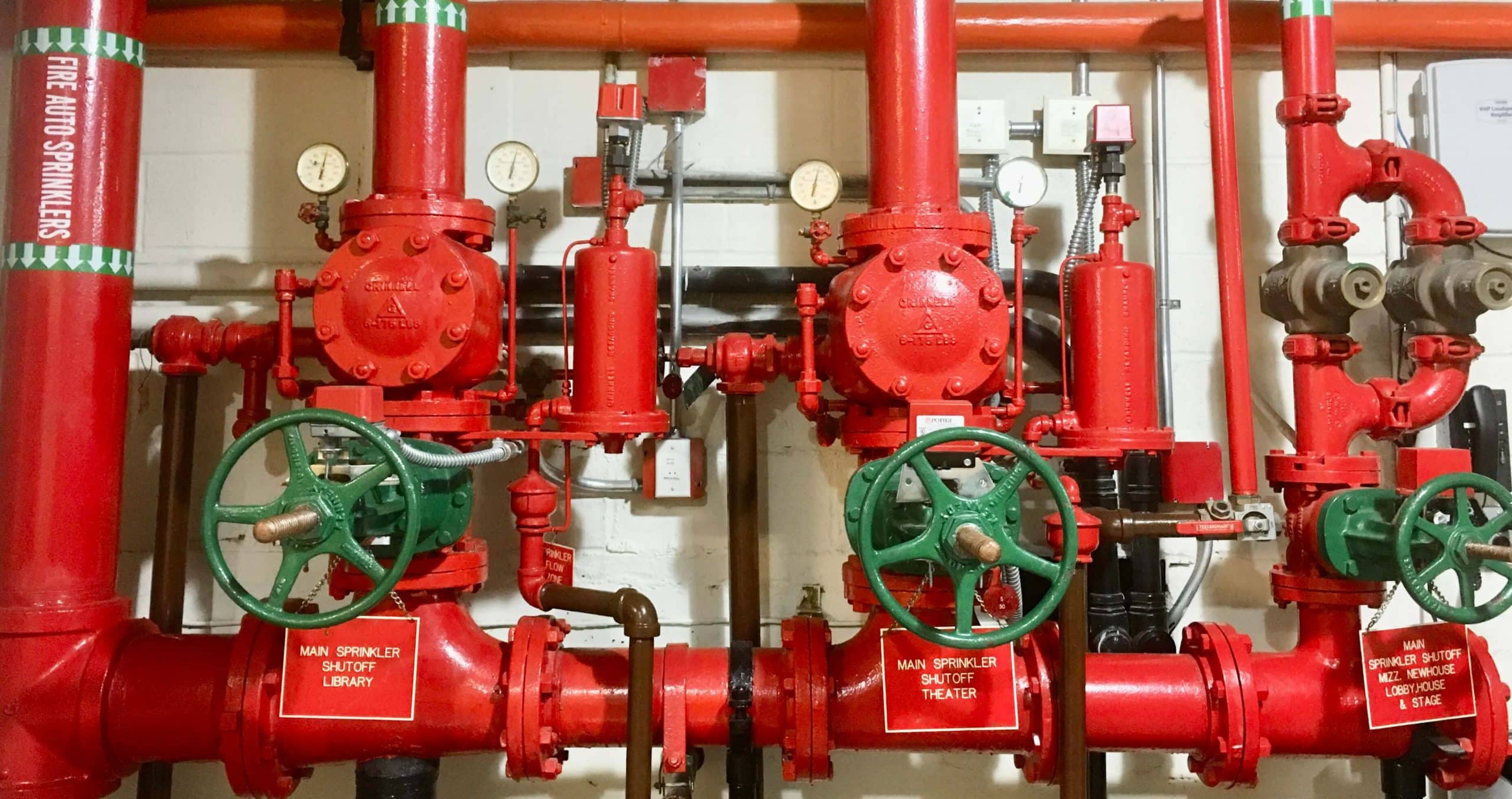

Mike Michalski, EIT, Technologies Director, is a Partner with PSE – leading infrastructure design for systems in security, telecommunications, A/V/V, data network systems, fire alarm, and lighting systems with 17 years of consulting experience.